Another cold and frosty December and, apart from the start of what my parents would call 'proper winter', that can only mean one thing: HPE Discover at the ExCeL centre in London.

Hewlett Packard Enterprise (HPE) always has something new to offer at its flagship technology showcase and this year was no exception. In this blog post, I focus on the key pieces of technology news from the conference that clearly map out HPE's strategy for the next 12 to 18 months.

Cloud

HPE's strategy on this couldn't be clearer. The first wave of customers to go 'all-in' on Cloud are starting to pull back a little: costs are not quite what was promised, data sovereignty is still an issue, and the required performance or latency is just out of reach. HPE have thought about this long and hard and fully envisage a hybrid infrastructure that splits a customer's applications three ways. In their view, roughly 20% will be born-in-the-cloud applications, making use of hyper-scale elastic architectures or requiring global presence; 20% will be SaaS from the likes of Salesforce, Dropbox and Office 365; and the remaining 60% will be the more traditional on-premises Enterprise applications, which need to be based closer to home for cost or performance reasons. Obviously, those percentages vary considerably with your business model, but what is true regardless is that HPE want you to think of them first when you're thinking about the large percentage that's staying in the Datacentre. What's increasingly clear from customers is that they really like the Cloud consumption model for infrastructure: workloads up in seconds, on a pay-as-you-go basis that's easy to consume.

HPE already have an Amazon Web Services (AWS)-compatible proposition in the form of Eucalyptus, and at the conference they announced the next step in bringing Azure to the datacentre, in the form of Azure Stack. This gives HPE a neat tie-in to those customers who want to explore porting workloads between private and public cloud, or who just want a platform to build a cloud-ready application in an environment that they've still got full control over. Azure Stack promises to bring a whole host of business benefits, but details of exactly how were reasonably light. Watch this space. In my honest opinion, I don't foresee many people wanting to build an on-premises piece of public cloud, even if it's just for data migration purposes. I'd guess that it's far more likely customers will position workloads where they're most appropriate, in the same way they've been doing for years with spinning disk and flash storage, or bare-metal installs and virtualisation.

Synergy

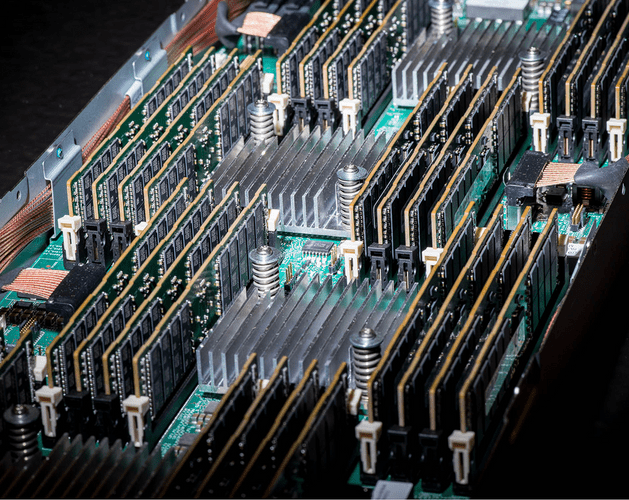

This year's Discover conference highlighted where Synergy is going to fit in HPE's portfolio. Synergy blends four things into a single product: HPE's expertise in high-density, cost-effective compute, storage and networking stacks (aka BladeSystem); their experience in creating Private Clouds with the Helion CloudSystem software stack; OneView for management; and the feedback from customers on how they want to consume infrastructure.

Synergy allows a customer to take compute, storage and networking in any combination across a number of frames and, using OneView templates, provision infrastructure in seconds. The secret to this comes in two parts. The first is the Composer which is, to all intents and purposes, a dedicated OneView management appliance. The second is the Image Streamer, which contains all the images for the VMs you'd want to create, the containers you'd want to swarm or the physical servers you'd wish to deploy.
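To make the 'pool plus template' idea a little more concrete, here's a toy model of it. This is purely illustrative and is not the OneView or Synergy API: the `Pool`, `Template`, `compose` and `release` names, the resource units and the image name are all my own assumptions.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    """Free resources across all frames (hypothetical units)."""
    compute_nodes: int
    storage_tb: int
    network_ports: int


@dataclass(frozen=True)
class Template:
    """A reusable description of what one workload needs (hypothetical)."""
    name: str
    compute_nodes: int
    storage_tb: int
    network_ports: int
    image: str  # name of a boot image, standing in for the image store


def compose(pool: Pool, template: Template) -> bool:
    """Carve the template's resources out of the pool if they all fit."""
    fits = (
        pool.compute_nodes >= template.compute_nodes
        and pool.storage_tb >= template.storage_tb
        and pool.network_ports >= template.network_ports
    )
    if fits:
        pool.compute_nodes -= template.compute_nodes
        pool.storage_tb -= template.storage_tb
        pool.network_ports -= template.network_ports
    return fits


def release(pool: Pool, template: Template) -> None:
    """Tear the workload down and hand its resources back to the pool."""
    pool.compute_nodes += template.compute_nodes
    pool.storage_tb += template.storage_tb
    pool.network_ports += template.network_ports


if __name__ == "__main__":
    pool = Pool(compute_nodes=12, storage_tb=100, network_ports=48)
    dev = Template("dev-cluster", compute_nodes=4, storage_tb=20,
                   network_ports=8, image="linux-base")
    print(compose(pool, dev), pool)
    release(pool, dev)
    print(pool)
```

The point of the sketch is simply that the same template can be stamped out and torn down repeatedly against one shared pool, rather than resources being tied to a particular box.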

Synergy is primarily aimed at customers who build and tear down machines a lot whilst developing or iterating new software, or who are constantly changing the size and scale of workloads. In short, customers who would traditionally have looked at Cloud. Synergy gives them the opportunity to build a highly scalable and reactive platform, enabling the kind of business agility that only cloud has been able to offer before. Finally, as with cloud, there is a whole ecosystem springing up to work with Synergy based on the availability of a single API: Puppet, Chef, VMware, OpenStack, Docker and Red Hat are all already involved.

The Machine

Talking of Synergy leads me neatly into 'the Machine'. HPE have been talking about the Machine for ages, and it's finally here (well, running in the lab). The Machine is HPE's 'Compute 2.0', if you will. I was lucky enough to see Kirk Bresniker, HPE Labs Chief Architect, explain why the Machine is so important at the last HPE CTO conference. Since 1965 we've seen the number of transistors on a processor double roughly every 18 months to two years, in what's termed 'Moore's Law'. The challenge is that we're reaching the limits of what we can physically do with silicon, because the transistors and the spaces between them are approaching the size of atoms. Compound this with the fact that the amount of data being created is exploding at an unprecedented rate (around 2.5 exabytes per day, or around 90 years of HD video). On top of that, we're reaching the limits of how many electrons we can physically jam down a copper trace, and lastly we could really do with memory-like speeds for storage that's actually persistent.
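To put some rough numbers on that, here's a quick back-of-the-envelope sketch. It takes the 18-month doubling period and the 2.5 exabytes per day quoted above as given (two years is the other commonly quoted doubling period), assumes decimal exabytes, and the function names are just mine for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60
BYTES_PER_EXABYTE = 10 ** 18  # decimal (SI) exabyte, an assumption
BYTES_PER_TERABYTE = 10 ** 12


def growth_factor(years: float, doubling_period_years: float = 1.5) -> float:
    """How many times over a quantity grows if it doubles every period."""
    return 2 ** (years / doubling_period_years)


def terabytes_per_second(exabytes_per_day: float) -> float:
    """Convert a daily data volume in exabytes to terabytes per second."""
    bytes_per_day = exabytes_per_day * BYTES_PER_EXABYTE
    return bytes_per_day / SECONDS_PER_DAY / BYTES_PER_TERABYTE


if __name__ == "__main__":
    # 15 years of doubling every 18 months is ten doublings.
    print(f"18-month doubling over 15 years: x{growth_factor(15):,.0f}")
    print(f"24-month doubling over 15 years: x{growth_factor(15, 2.0):,.0f}")
    print(f"2.5 EB/day is about {terabytes_per_second(2.5):.1f} TB/s")
```

Ten doublings in 15 years is a 1,024-fold increase, which is why even a small slowdown in the doubling period makes such a big difference over a decade or two.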

Enter the Machine.

The Machine takes on a number of the challenges facing modern computing architecture and neatly fixes a bunch of them. First up is Photonics. Light can carry far more data than electrical signals over copper, and with less loss over distance, so by coupling processors and memory together using fibre rather than copper we can gain massive performance boosts. Next is swapping flash or spinning disk for persistent memory. Memory is orders of magnitude faster than spinning disk, and considerably faster than flash, and once either HPE's Memristor or Intel's 3D XPoint can be connected to the processors with light we have a shot at building hugely scalable, high-performance platforms capable of tackling the slowdown in processor growth and the explosion of storage in one fell swoop. Best of all? Although this stuff is all in the lab at the moment, given time it's all capable of being integrated into a Synergy frame.

IoT, the 'Edge' and Big Data

Although these are separate areas and technologies, they impact each other. Let's start with IoT. The theory has been around for ages and products are only now starting to come to market in a useful way. The premise is to connect normally inanimate objects to the internet to make them 'smart', or to buy things with internet capability built in so that they can do more useful things. One example is my Amazon Echo paired with a Philips Hue Bridge. I can now wirelessly, from my phone, turn on my lights whilst I'm away from home, or get Alexa (the Echo's 'personality') to check my commute or the next train time. The possibilities are endless and awesome.

Thinking with a more business slant, IoT can be useful in hundreds of different ways. Take the automotive industry, for example. HPE decked out a BMW i3 with IoT capability, collecting metrics about the weather, driving conditions and fuel consumption, whilst also giving the driver access to traffic reports and music. Considering the millions of cars and tens of millions of journeys, the data collected could be used to build better cars, give a better driving experience or create a safer drive for other road users. All that data would usually need to be transmitted and analysed somewhere else, and that's where 'the Edge' part of this comes in. HPE has a number of compute products that can do server-grade analysis of IoT-type data in real time, at the location where it was recorded, giving instant feedback on the gathered data. Pretty cool, eh? If deeper analysis is required, or the amount of data gathered is too much for an Edgeline server, then 'Big Data' steps up to the plate. Big Data takes huge data sets, looks for patterns and trends, and analyses them to provide useful information. HPE have a wealth of collection methods (Aruba!) and analysis tools (the old HAVEn platform: Hadoop, Autonomy, Vertica etc.).
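As a rough illustration of the Edge idea, the sketch below boils a batch of raw sensor readings down to a small summary on the spot, flags anything unusual locally, and leaves only the summary to be sent on for deeper analysis. It isn't based on any HPE product or API: the `summarise_at_edge` function, the temperature readings and the threshold are all made up for the example.

```python
from statistics import mean


def summarise_at_edge(readings: list[float], alert_threshold: float) -> dict:
    """Reduce raw readings to a compact summary and flag outliers locally."""
    if not readings:
        raise ValueError("no readings to summarise")
    # Anything above the threshold is acted on at the edge, immediately.
    alerts = [r for r in readings if r > alert_threshold]
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "alerts": alerts,
    }


if __name__ == "__main__":
    # Hypothetical temperature readings from one vehicle sensor.
    raw = [20.0, 22.0, 21.0, 35.0]
    summary = summarise_at_edge(raw, alert_threshold=30.0)
    print(summary)  # only this summary, not the raw data, travels upstream
```

The design choice is the usual edge trade-off: act on the urgent bits straight away where the data is generated, and only ship the condensed version back to the datacentre for the heavyweight pattern-spotting.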

Get in touch

To find out how we can help you with any HPE requirements, contact your Softcat account manager.